What Happens in Crowd Scenes: A New Dataset about Crowd Scenes for Image Captioning

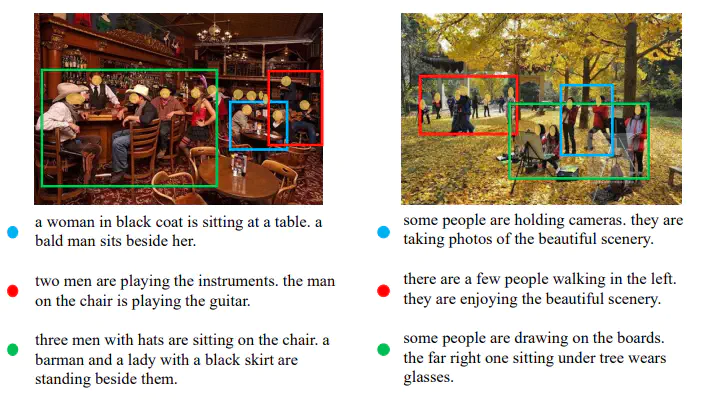

CrowdCaption Dataset

CrowdCaption is a new challenging image captioning dataset for complex real-world crowd scene understanding, which towards to describe crowd scene. Our dataset has the advantages of crowd-topic scenes, comprehensive and complex caption descriptions, typical relationships and detailed grounding annotations. The complexity and diversity of the descriptions and the specificity of the crowd scenes make this dataset extremely challenging. This dataset contains 43,306 captions for 21,794 regions with bounding boxes on 11161 images. There are 7,161 images for training, 1,000 images for validation and 3,000 images for testing, respectively.

Download CrowdCaption Dataset

To ensure the rational use of CrowdCaption dataset, researchers requires to sign CrowdCaption Terms of Use as restrictions on access to dataset to privacy protection and use dataset for non-commercial research and/or educational purposes. If you have recieved access, you can download and extract our CrowdCaption Dataset.

The directory structure of the dataset is as follows:

CrowdCaption/

├── crowdcaption_images.zip

│

└── crowdcaption.json

│

└── features_fasterrcnn/

├── part1.zip/

└── part2.zip/

│

└── features_hrnet_keypoints

├── part01.zip/

├── ...

└── part09.zip/

Annotations

In CrowdCaption dataset, each image may contains multiple caption annotations, and each caption annotations has a detailed region grounding annotation. The location can be denoted as (x, y, w, h), where (x, y) denotes the top left of the region, w and h denote its width and height. The dataset annotations are provided in JSON format. Researchers can read the annotation files by the following Python 3 code:

import json

path='./crowdcaption.json'

info_dict=json.load(open(path))['images']

image_name=info_dict['filename'] # image name

split=info_dict['split'] # image split in train, val or test

image_id=info_dict['imgid'] # image id

human_position=info_dict['box'] # single person localization annotations [x, y, w, h]

sentence_id=info_dict['dense_caption']['sentids'] # sentence id

region_id=info_dict['dense_caption']['region_ids'] # region id

sentences=info_dict['dense_caption']['sentences'] # all descriptions for each images

region_position=info_dict['dense_caption']['union_boxes'] # crowd region localization annotations [x, y, w, h] for each images

human_in_region_idx=info_dict['dense_caption']['sentences_region_idx'] # the human idx for who are in the corresponding region

If you have any question, please concat us ivipclab539@126.com.

Citation

If you find CrowdCaption useful in your research, please consider citing:

@inproceedings{wang2021what,

title={What Happens in Crowd Scenes: A New Dataset about Crowd Scenes for Image Captioning},

author={Wang, Lanxiao and Li, Hongliang and Wen, Zhehu, Zhang, Xiaoliang and Qiu, Heqian,

Meng, Fanman and Wu, Qingbo},

booktitle={IEEE Transactions on Multimedia},

pages={0--0},

year={2022}

}