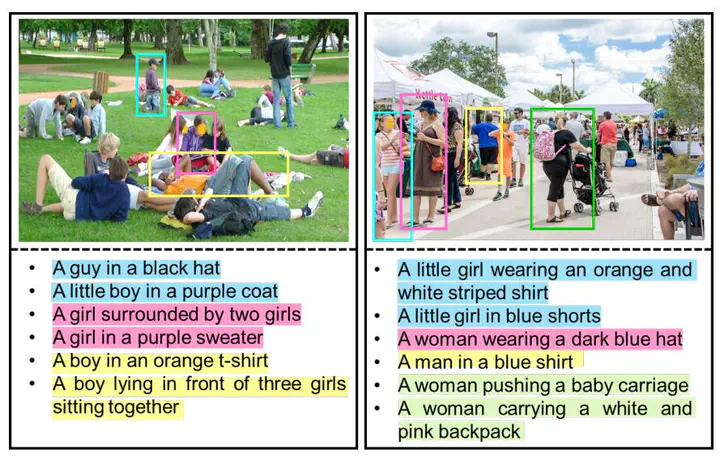

RefCrowd Dataset

RefCrowd is a new challenging referring comprehension dataset for complex real-world crowd grounding, which towards looking for the target person in crowd with referring expressions. Our dataset contains a crowd of persons some of whom share similar visual appearance, and diverse natural languages covering unique properties of the target person. It not only requires to sufficiently mine and understand natural language information, but also requires to carefully focus on subtle differences between persons in an image, so as to realize fine-grained mapping from language to vision.

This dataset contains 75,763 expressions for 37,999 queried persons with bounding boxes on 10,702 images. There are 6,885 images with 48,509 expressions for training, 1,260 images with 9,074 expressions for validation and 2,557 images with 18,180 expressions for testing, respectively.

Download RefCrowd Dataset

To ensure the rational use of RefCrowd dataset, researchers requires to sign RefCrowd Terms of Use as restrictions on access to dataset to privacy protection and use dataset for non-commercial research and/or educational purposes.

If you have recieved access, you can download and extract our RefCrowd Dataset.

The directory structure of the dataset is as follows:

RefCrowd/

├── images/

│ ├── train/

│ ├── val/

│ └── test/

│

└── annotations/

├── train.json

├── val.json

└── test.json

Annotations

In RefCrowd dataset, each image may contains multiple persons, and each person can be described by multiple expressions/sentences. The person location can be denoted as (x_c, y_c, w, h), where (x_c, y_c) denotes the center coordinates of a person, w and h denote its width and height.

The dataset annotations are provided in JSON format. Researchers can read the annotation files by the following Python 3 code:

import json

info_dict=json.load(open(ann_file,'r'))

Images=info_dict['Imgs'] # image annotations

Anns=info_dict['Anns'] # person localization annotations

Refs=info_dict['Refs'] # all expressions for each person annotations

Sents=info_dict['Sents'] # each experssion annotations

Cats=info_dict['Cats'] # only include the person catgory

att_to_ix=info_dict['att_to_ix'] # all attribute categories

att_to_cnt=info_dict['att_to_cnt'] # the number of each attribution in RefCrowd

Evaluation

We calculate the intersection-over-union (IoU) between the predicted bounding box and ground-truth one to measure whether the prediction is correct. A predicted bounding is treated as correct if IoU is higher than desired IoU threshold. Instead of using single IoU threshold 0.5, we adopt the mean accuracy mAcc to measure the localization performance of method which averages the accuracy over IoU thresholds from loose 0.5 to strict 0.95 with interval 0.05 similar to popular COCO metrics. mAcc is a comprehensive indicator for widely real-world applications.

For convenience, researchers can refer to the code (refcrowd.py) provided by us for reading annotations and evaluation method.

If you have any question, please concat us (hqqiu@std.uestc.edu.cn).

Citation

If you find RefCrowd useful in your research, please consider citing:

@article{qiu2022refcrowd,

title={RefCrowd: Grounding the Target in Crowd with Referring Expressions},

author={Qiu, Heqian and Li, Hongliang and Zhao, Taijin and Wang, Lanxiao and Wu, Qingbo and Meng, Fanman},

journal={arXiv preprint arXiv:2206.08172},

year={2022}

}