Towards Continual Egocentric Activity Recognition: A Multi-modal Egocentric Activity Dataset for Continual Learning

UESTC-MMEA-CL Dataset

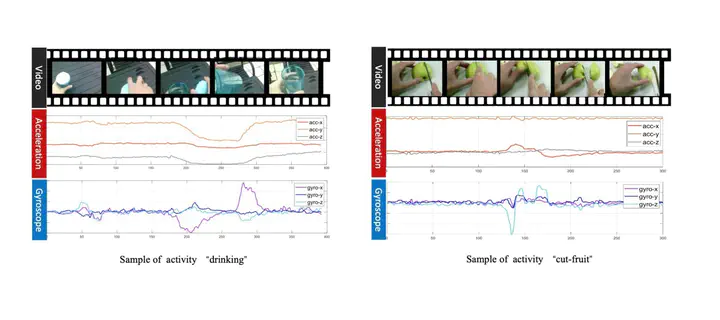

UESTC-MMEA-CL is a new multi-modal activity dataset for continual egocentric activity recognition, proposed to promote future studies on continual learning for first-person activity recognition in wearable applications. The dataset provides vision data with auxiliary inertial sensor data, and covers comprehensive and complex daily activity categories for continual learning research. In total, UESTC-MMEA-CL comprises 30.4 hours of fully synchronized first-person video clips, acceleration streams, and gyroscope data. There are 32 activity classes in the dataset, and each class contains approximately 200 samples. We divide the samples of each class into training, validation, and test sets at a ratio of 7:2:1.

Sample activities shown: Cut fruits, Fall.

Continual Egocentric Activity Recognition

For the continual learning evaluation, we present three settings of incremental steps, i.e., the 32 classes are divided into {16, 8, 4} incremental steps and each step contains {2, 4, 8} activity classes, respectively.
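The three settings above can be sketched as a simple partition of the 32 class indices. This is only an illustration: the function name `make_incremental_steps` is hypothetical, and the sketch assumes classes are assigned to steps in numeric order, which may differ from the class order used in the actual evaluation protocol.

```python
def make_incremental_steps(num_classes=32, classes_per_step=2):
    """Partition class indices 0..num_classes-1 into equal-sized steps.

    Assumption: classes are taken in numeric order; the real protocol
    may use a different (e.g. shuffled) class order.
    """
    if classes_per_step <= 0 or num_classes % classes_per_step != 0:
        raise ValueError("classes_per_step must evenly divide num_classes")
    class_ids = list(range(num_classes))
    return [
        class_ids[start:start + classes_per_step]
        for start in range(0, num_classes, classes_per_step)
    ]


if __name__ == "__main__":
    # 2, 4, and 8 classes per step give 16, 8, and 4 steps respectively.
    for per_step in (2, 4, 8):
        steps = make_incremental_steps(32, per_step)
        print(f"{per_step} classes per step -> {len(steps)} steps")
```

A model would then be trained on the classes of step 0, then step 1, and so on, and evaluated on all classes seen so far after each step.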

Download UESTC-MMEA-CL Dataset

Usage License: All videos and data in UESTC-MMEA-CL

Download: UESTC-MMEA-CL

The directory structure of the dataset is as follows:

UESTC-MMEA-CL/
├── train.txt
├── val.txt
├── test.txt
├── video/
│   ├── 1_upstairs/
│   ├── ...
│   └── 32_watch_TV/
└── sensor/
    ├── 1_upstairs/
    ├── ...
    └── 32_watch_TV/

Note for data files

Video data is stored under the path ‘./video/[class_number]_[class_name]/xx.mp4’.

e.g., ./video/1_upstairs/1_upstairs_2020_12_15_15_58_48.mp4

Sensor data, which contains acceleration and gyroscope readings, is stored under the path ‘./sensor/[class_number]_[class_name]/xx.csv’.

e.g., ./sensor/1_upstairs/1_upstairs_2020_12_15_15_58_48.csv
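Given the path layout above, the sensor file for a video can be derived from the video path alone. The following is a minimal sketch; the helper names `sensor_path_for` and `parse_class_dir` are hypothetical, and it assumes every video has a sensor file with the same file stem, as the two example paths suggest.

```python
from pathlib import PurePosixPath


def parse_class_dir(class_dir):
    """Split a directory name such as '32_watch_TV' into (32, 'watch_TV')."""
    number, _, name = class_dir.partition("_")
    return int(number), name


def sensor_path_for(video_path):
    """Map .../video/<class_dir>/<stem>.mp4 to .../sensor/<class_dir>/<stem>.csv.

    Assumption: the sensor file shares the video's file stem.
    """
    path = PurePosixPath(video_path)
    parts = list(path.parts)
    if len(parts) < 3 or parts[-3] != "video" or path.suffix != ".mp4":
        raise ValueError(f"unexpected video path layout: {video_path}")
    parts[-3] = "sensor"
    return str(PurePosixPath(*parts).with_suffix(".csv"))


if __name__ == "__main__":
    video = "./video/1_upstairs/1_upstairs_2020_12_15_15_58_48.mp4"
    print(sensor_path_for(video))
    print(parse_class_dir(PurePosixPath(video).parent.name))
```

Note that `pathlib` normalizes away the leading `./`, so the returned path is relative to the dataset root without that prefix.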

Files with the same name prefix belong to the same sample. In each sensor .csv file, the first three columns hold the raw accelerometer readings along the x, y, and z axes, and the last three columns hold the raw gyroscope readings along the x, y, and z axes. The sensitivity factors used in our acquisition settings are Racc = 16384 and Rgyro = 16.4. Dividing the raw values by the corresponding sensitivity factor gives the real acceleration in g and the gyroscope angular velocity in degrees/s.
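The conversion from raw readings to physical units can be sketched as follows. The function names are hypothetical, and the loader assumes the .csv files are comma-separated with no header row, which should be checked against the actual files; only the two sensitivity factors come from the description above.

```python
import csv
import io

ACC_SENSITIVITY = 16384.0  # Racc: raw counts per g
GYRO_SENSITIVITY = 16.4    # Rgyro: raw counts per degree/s


def convert_row(raw_row):
    """Convert one raw row to [ax, ay, az] in g plus [gx, gy, gz] in degrees/s.

    Uses the first three and the last three columns, as described in the text.
    """
    values = [float(v) for v in raw_row]
    if len(values) < 6:
        raise ValueError("expected at least six columns per row")
    acc = [v / ACC_SENSITIVITY for v in values[:3]]
    gyro = [v / GYRO_SENSITIVITY for v in values[-3:]]
    return acc + gyro


def load_sensor_csv(fileobj):
    """Read raw rows from an open text file and convert each one.

    Assumption: comma-separated values, no header row.
    """
    return [convert_row(row) for row in csv.reader(fileobj) if row]


if __name__ == "__main__":
    # Made-up raw values, used only to illustrate the scaling.
    sample = io.StringIO("16384,0,-16384,164,0,-164\n")
    print(load_sensor_csv(sample))
```

To read a real file, open it with `open(path, newline="")` and pass the file object to `load_sensor_csv`.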

For convenience, researchers can refer to this code for usage and evaluation on this dataset.

If you have any questions, please contact us.

Citation

If you find UESTC-MMEA-CL useful in your research, please consider citing:

@ARTICLE{10184468,
  author={Xu, Linfeng and Wu, Qingbo and Pan, Lili and Meng, Fanman and Li, Hongliang and He, Chiyuan and Wang, Hanxin and Cheng, Shaoxu and Dai, Yu},
  journal={IEEE Transactions on Multimedia},
  title={Towards Continual Egocentric Activity Recognition: A Multi-Modal Egocentric Activity Dataset for Continual Learning},
  year={2024},
  volume={26},
  number={},
  pages={2430-2443},
  doi={10.1109/TMM.2023.3295899}
}